At Qure, we regularly work on segmentation and object detection problems and we were therefore interested in reviewing the current state of the art.

In this post, I review the literature on semantic segmentation. Most research on semantic segmentation uses natural/real-world image datasets. Although the results are not directly applicable to medical images, I review these papers because research on natural images is much more mature than research on medical images.

This post is organized as follows: I first explain the semantic segmentation problem, then give an overview of the approaches, and finally summarize a few interesting papers.

In a later post, I’ll explain why medical images are different from natural images and examine how the approaches from this review fare on a dataset representative of medical images.

What exactly is semantic segmentation?

Semantic segmentation means understanding an image at the pixel level, i.e., we want to assign an object class to each pixel in the image.

Apart from recognizing the bike and the person riding it, we also have to delineate the boundaries of each object. Therefore, unlike classification, we need dense pixel-wise predictions from our models.
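To make "dense pixel-wise prediction" concrete, here is a minimal sketch in plain Python. It assumes a model has already produced one score per class per pixel; the class names and score values are made up for illustration, and the label map is simply the per-pixel argmax over classes.

```python
# Hypothetical class names and scores, chosen only for illustration.
CLASSES = ["background", "person", "bike"]

def segment(scores):
    """scores[c][y][x] is the score of class c at pixel (y, x).

    Returns a label map holding one class index per pixel (per-pixel argmax).
    """
    num_classes = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    return [
        [max(range(num_classes), key=lambda c: scores[c][y][x]) for x in range(width)]
        for y in range(height)
    ]

# A tiny 2x3 "image": one score plane per class.
scores = [
    [[0.90, 0.2, 0.1], [0.8, 0.1, 0.2]],  # background
    [[0.05, 0.7, 0.1], [0.1, 0.8, 0.1]],  # person
    [[0.05, 0.1, 0.8], [0.1, 0.1, 0.7]],  # bike
]
label_map = segment(scores)
print([[CLASSES[c] for c in row] for row in label_map])
```

A classifier would return a single label for the whole image; here the output has the same height and width as the input.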

VOC2012 and MSCOCO are the most important datasets for semantic segmentation.

What are the different approaches?

Before deep learning took over computer vision, people used approaches like TextonForest and Random Forest based classifiers for semantic segmentation. As with image classification, convolutional neural networks (CNN) have had enormous success on segmentation problems.

One of the popular initial deep learning approaches was patch classification, where each pixel was separately classified using a patch of the image around it. The main reason to use patches was that classification networks usually have fully connected layers and therefore require fixed-size inputs.

In 2014, Fully Convolutional Networks (FCN) by Long et al. from Berkeley popularized CNN architectures for dense prediction without any fully connected layers. This allowed segmentation maps to be generated for images of any size and was also much faster than the patch classification approach. Almost all subsequent state-of-the-art approaches to semantic segmentation adopted this paradigm.

Apart from fully connected layers, one of the main problems with using CNNs for segmentation is pooling layers. Pooling layers increase the field of view and are able to aggregate the context while discarding the ‘where’ information. However, semantic segmentation requires the exact alignment of class maps and thus, needs the ‘where’ information to be preserved. Two different classes of architectures evolved in the literature to tackle this issue.

The first is the encoder-decoder architecture. The encoder gradually reduces the spatial dimension with pooling layers, and the decoder gradually recovers the object details and spatial dimension. There are usually shortcut connections from encoder to decoder to help the decoder recover object details better. U-Net is a popular architecture from this class.

Conditional Random Field (CRF) postprocessing is often used to improve the segmentation. CRFs are graphical models that ‘smooth’ the segmentation based on the underlying image intensities. They rely on the observation that pixels of similar intensity tend to be labeled as the same class. CRFs can boost scores by 1-2%.

In the next section, I’ll summarize a few papers that represent the evolution of segmentation architectures starting from FCN. All these architectures are benchmarked on the VOC2012 evaluation server.

Summaries

The following papers are summarized (in chronological order):

- FCN

- SegNet

- Dilated Convolutions

- DeepLab (v1 & v2)

- RefineNet

- PSPNet

- Large Kernel Matters

- DeepLab v3

For each of these papers, I list the key contributions and explain them. I also show their benchmark scores (mean IOU) on the VOC2012 test set.
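As a reminder of what the metric measures, the sketch below computes mean IOU for a single predicted label map against its ground truth. It is only an illustration: the function name and toy arrays are made up, and skipping classes that appear in neither map is an assumption that may differ from how an evaluation server aggregates over a whole test set.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection-over-union across classes for two label maps.

    Classes absent from both maps are skipped (an assumption made here).
    """
    ious = []
    for c in range(num_classes):
        intersection = union = 0
        for pred_row, target_row in zip(pred, target):
            for p, t in zip(pred_row, target_row):
                intersection += (p == c) and (t == c)
                union += (p == c) or (t == c)
        if union > 0:
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0

pred   = [[0, 0, 1], [0, 1, 1]]
target = [[0, 1, 1], [0, 1, 1]]

# Class 0: intersection 2, union 3. Class 1: intersection 3, union 4.
print(mean_iou(pred, target, num_classes=2))
```

One mislabeled pixel out of six costs both classes here, because the pixel counts toward the union of the class it was wrongly given and of the class it should have had.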

FCN

- Fully Convolutional Networks for Semantic Segmentation

- Submitted on 14 Nov 2014

- Arxiv Link

Key Contributions:

- Popularize the use of end-to-end convolutional networks for semantic segmentation

- Re-purpose ImageNet-pretrained networks for segmentation

- Upsample using deconvolutional layers

- Introduce skip connections to improve over the coarseness of upsampling

Explanation:

The key observation is that fully connected layers in classification networks can be viewed as convolutions with kernels that cover their entire input regions. This is equivalent to evaluating the original classification network on overlapping input patches, but it is much more efficient because computation is shared over the overlapping regions of the patches. Although this observation is not unique to this paper (see OverFeat, this post), it improved the state of the art on VOC2012 significantly.

After convolutionalizing the fully connected layers in an ImageNet-pretrained network like VGG, feature maps still need to be upsampled because of the pooling operations in CNNs. Instead of using simple bilinear interpolation, deconvolutional layers can learn the interpolation. This layer is also known as upconvolution, full convolution, transposed convolution or fractionally-strided convolution.
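The equivalence can be checked on a toy 1-D case. The sketch below is an illustration only, with made-up weights: a single-output fully connected layer that expects 3 inputs is applied patch by patch, and the same weights are then used as a convolution kernel over the whole signal.

```python
def fully_connected(patch, weights, bias):
    """A single-output fully connected layer on a fixed-size input."""
    assert len(patch) == len(weights)
    return sum(p * w for p, w in zip(patch, weights)) + bias

def conv1d_valid(signal, kernel, bias):
    """'Valid' 1-D convolution (cross-correlation, as in most DL libraries)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k)) + bias
        for i in range(len(signal) - k + 1)
    ]

weights, bias = [1.0, -2.0, 0.5], 0.25  # hypothetical trained parameters
signal = [3.0, 1.0, 4.0, 1.0, 5.0, 9.0]  # longer than the layer's input size

# Patch classification: run the fixed-size layer on every window.
patchwise = [
    fully_connected(signal[i:i + 3], weights, bias)
    for i in range(len(signal) - 2)
]
# Convolutionalized layer: one pass over the whole signal.
dense = conv1d_valid(signal, weights, bias)

print(patchwise == dense)
```

With a single layer the two routes do the same arithmetic. The savings come in a deep network, where the earlier layers' outputs on overlapping patches are computed once and reused instead of being recomputed for every patch.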
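A 1-D sketch of the upsampling step is shown below. It is a simplification: the kernel is fixed to linear-interpolation weights so the output is easy to verify by hand, whereas in a network the kernel values would be trainable parameters. The function name and inputs are made up for illustration.

```python
def conv_transpose1d(x, kernel, stride):
    """1-D transposed convolution.

    Each input value 'stamps' a scaled copy of the kernel into the output,
    with consecutive stamps placed `stride` positions apart.
    """
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, value in enumerate(x):
        for j, w in enumerate(kernel):
            out[i * stride + j] += value * w
    return out

linear_kernel = [0.5, 1.0, 0.5]  # reproduces linear interpolation at stride 2
coarse = [1.0, 3.0, 2.0]
upsampled = conv_transpose1d(coarse, linear_kernel, stride=2)
print(upsampled)  # [0.5, 1.0, 2.0, 3.0, 2.5, 2.0, 1.0]
```

Ignoring the two border values, the output contains the original samples (1, 3, 2) with their midpoints (2 and 2.5) in between. A learned kernel is free to deviate from these weights if that reduces the segmentation loss.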

However, upsampling (even with deconvolutional layers) produces coarse segmentation maps because of loss of information during pooling. Therefore, shortcut/skip connections are introduced from higher resolution feature maps.

Benchmarks (VOC2012):

| Score | Comment | Source |
|---|---|---|
| 62.2 | – | leaderboard |
| 67.2 | More momentum. Not described in paper | leaderboard |

My Comments:

- This was an important contribution, but the state of the art has improved a lot since then.

SegNet

- SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation

- Submitted on 2 Nov 2015

- Arxiv Link

Key Contributions:

- Maxpooling indices transferred to decoder to improve the segmentation resolution.

Explanation:

FCN, despite upconvolutional layers and a few shortcut connections, produces coarse segmentation maps. Therefore, more shortcut connections are introduced. However, instead of copying the encoder features as in FCN, the indices from max pooling are copied. This makes SegNet more memory efficient than FCN.
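The idea of passing pooling indices can be sketched in 1-D as follows. This is an illustration, not SegNet's implementation: the function names are made up, and the sparse output of the unpooling step would normally be followed by further convolutions in the decoder.

```python
def max_pool_with_indices(x, size=2):
    """Non-overlapping 1-D max pooling that also returns argmax positions."""
    pooled, indices = [], []
    for start in range(0, len(x) - size + 1, size):
        window = x[start:start + size]
        offset = max(range(size), key=lambda j: window[j])
        pooled.append(window[offset])
        indices.append(start + offset)
    return pooled, indices

def max_unpool(pooled, indices, length):
    """Put each pooled value back at its recorded position; zeros elsewhere."""
    out = [0.0] * length
    for value, index in zip(pooled, indices):
        out[index] = value
    return out

features = [1.0, 5.0, 2.0, 0.0, 7.0, 3.0]
pooled, indices = max_pool_with_indices(features)       # encoder side
restored = max_unpool(pooled, indices, len(features))   # decoder side

print(pooled, indices)  # [5.0, 2.0, 7.0] [1, 2, 4]
print(restored)         # [0.0, 5.0, 2.0, 0.0, 7.0, 0.0]
```

Only one integer per pooling window has to be kept for the decoder, rather than the full-resolution feature map, which is where the memory saving comes from.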

Benchmarks (VOC2012):

| Score | Comment | Source |
|---|---|---|
| 59.9 | – | leaderboard |

My comments:

- FCN and SegNet are among the first encoder-decoder architectures.

- SegNet's benchmark scores are not good enough for it to be used anymore.

Dilated Convolutions

- Multi-Scale Context Aggregation by Dilated Convolutions

- Submitted on 23 Nov 2015

- Arxiv Link

Key Contributions:

- Use dilated convolutions, a convolutional layer for dense predictions.

- Propose a ‘context module’ that uses dilated convolutions for multi-scale aggregation.

Explanation:

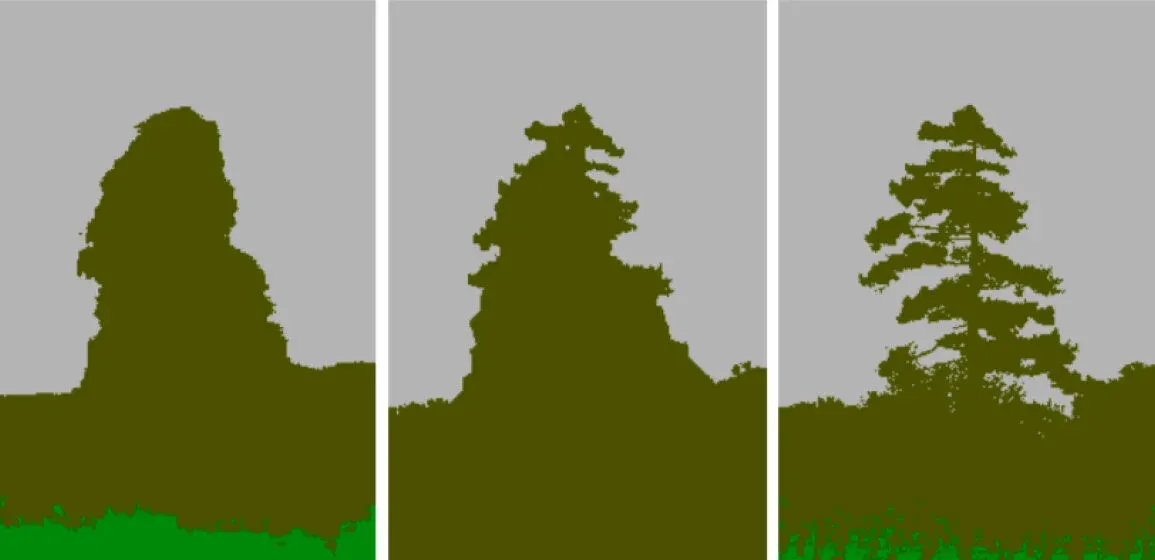

Pooling helps in classification networks because it increases the receptive field. But it is not ideal for segmentation because pooling also decreases the resolution. Therefore, the authors use a dilated convolution layer, which works like this:
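In code, a 1-D version of the operation might look like the sketch below. It is a simplified illustration with made-up inputs, not the paper's implementation: the kernel taps are applied `dilation` samples apart, so the receptive field grows without adding weights and without any pooling.

```python
def dilated_conv1d(signal, kernel, dilation):
    """'Valid' 1-D dilated convolution (cross-correlation form).

    Kernel taps are applied `dilation` samples apart.
    """
    span = (len(kernel) - 1) * dilation + 1  # receptive field of one output
    return [
        sum(signal[i + j * dilation] * w for j, w in enumerate(kernel))
        for i in range(len(signal) - span + 1)
    ]

signal = [1, 2, 3, 4, 5, 6, 7]
kernel = [1, 0, -1]  # 3 weights in both cases

dense = dilated_conv1d(signal, kernel, dilation=1)    # each output sees 3 samples
dilated = dilated_conv1d(signal, kernel, dilation=2)  # each output sees 5 samples

print(dense)    # [-2, -2, -2, -2, -2]
print(dilated)  # [-4, -4, -4]
```

The outputs shrink here only because no padding is used; with suitable padding the output keeps the input's resolution, unlike pooling.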